Is It Time for a New AI?

A Call to Stop the Slop

(Illustration credit: Arts & Science Magazine)

“We can only be human together.”

- Desmond Tutu

Yeah … it has been a long silence.

Not the silence of distraction, but of watching. Listening. Since I last wrote, the world of AI has taken a turn. Each week arrives with another launch: an app for infinite video loops, a new way to optimize porn, a headline stating that over 50% of digital content is now synthetic. The pace itself has become the message, drowning out the question we should be asking more often: “What are we actually building, and why?”

Everywhere I looked, one pattern repeated: systems automating other systems.

Recursively. Relentlessly. A kind of technological ouroboros, consuming its own tail with increasing velocity. Even our metaphors are running out of breath. We’ve moved past “disruption” and “transformation” into something more unsettling: displacement without destination.

In January 2025, AI promised to “cure cancer”. By October, we’re building social media platforms for AI characters and AI-generated videos. This isn’t just mission drift. It’s a profound confusion about what problems we’re solving, and for whom.

The pattern is historical, almost rhythmic in its predictability:

Late 2022, “the ChatGPT (GPT-3.5) explosion”: a Cambrian moment of wrappers, copilots, agents, and automation tools. Headlines celebrated the achievement: “intelligence at scale,” “creativity for all,” “work reimagined,” “the largest productivity boost in history” …

Late 2024, the reckoning arrived. Much of what was produced proved brittle, shallow, repetitive. Headlines now warned of a bubble “17 times the size of the dot-com bubble and four times bigger than the 2008 real-estate bubble…”

In late 2025, we’re entering a different phase entirely, one characterized by cognitive fatigue from relentless noise and automation. It’s the exhaustion of constantly adapting to tools that are themselves constantly changing, never stabilizing long enough to show what they can do.

This isn’t just a wave. It’s another cultural compression event, a historical pattern we’ve seen before:

New technology → prematurely monetized → collapses into noise → leaves behind a residue of its potential

From Expansion to Extraction

Every technology begins as a promise of expansion. But almost always, it drifts toward extraction.

The printing press gave us liberation, then propaganda. The factory promised progress, then delivered exhaustion. The internet began as an open commons, then became a surveillance machine wrapped in dopamine loops.

There’s something almost mythological in all of this … not quite a curse, but perhaps closer to what the Greeks called hubris: the belief that we can wield power without being shaped by it. We reach for tools to extend our capabilities, only to discover the tools have quietly rewritten the terms of our existence.

And maybe that’s the real question here … not what these technologies do, but what they reveal. About us. About the world we’ve built to contain ourselves.

They’ve exposed our hunger for validation, made visible our fractured attention, laid bare our growing fatigue with one another. We are surrounded by symptoms (addiction to notifications, anxiety spirals, the hollow feeling after scrolling), but it’s remarkably hard to see what caused the disease when everyone is busy self-diagnosing.

We talk about the AI bubble, the misuse of data, the environmental cost.

All true. All urgent. But maybe the real story is simpler … just painful to accept.

The Logic Beneath the Slop

We’ve been running the world on autopilot for longer than AI has existed. We automated our attention before algorithms did … checking boxes, following scripts, performing the motions of meaning without pausing to ask if the motions themselves still mean anything.

AI isn’t inventing a new problem. It isn’t making decisions on its own, at least not yet. The technology is still plugged into an operating system powered by us, running on a logic we built long ago.

Consider the script we’ve internalized about value creation at work, about what signals worth:

Chasing titles and algorithms that confer borrowed significance. Performing sincerity in carefully composed posts. Sculpting our presence around what we hope others will accept. Post-morteming every conversation for signs we said the wrong thing. Confusing validation with connection. Mistaking activity for purpose. Velocity for direction.

So yes, I worry about the bubble. I worry about the environmental cost of data centers burning energy to generate content no human will ever be able to consume. But I worry too about the logic that produces them … the one that turns everything luminous into garbage, that industrializes even our imagination.

I’m genuinely impressed when I see a startup hit $200M ARR in record time, for example. That’s no small feat. But what unsettles me is what happens next: the echo chamber kicks in. I sit on calls where all I hear is, “How can we replicate that?” Not: “What did they build? What value did it create? Who did it help?” But: “How do we engineer the ARR curve?”

The nuance is erased. The context stripped. The founder’s unique insight flattened into a playbook. Why must everything become a script? Why can’t we celebrate someone’s success and then rather than reproduce their path … be inspired to find our own?

The Slop Machine Economy

What’s happening in AI today isn’t a crisis of technology. Or application. It’s a crisis of direction, a collective forgetting of what we’re building toward.

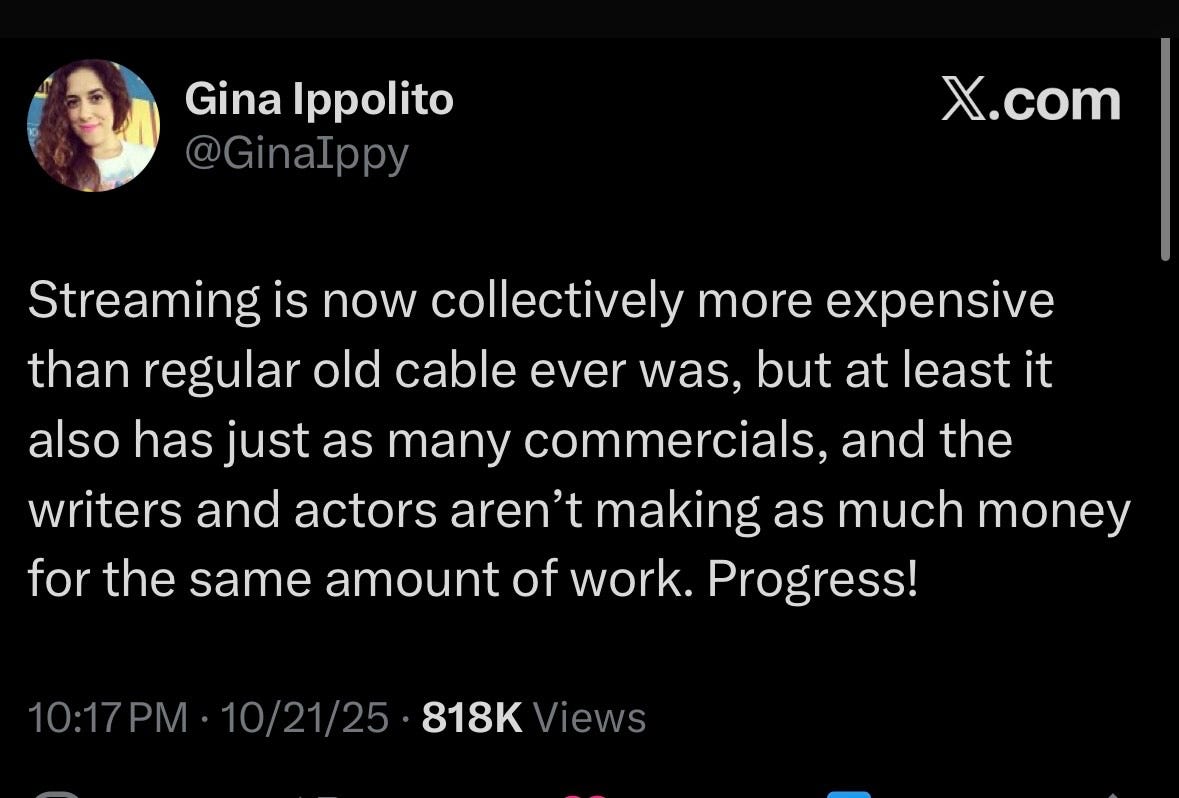

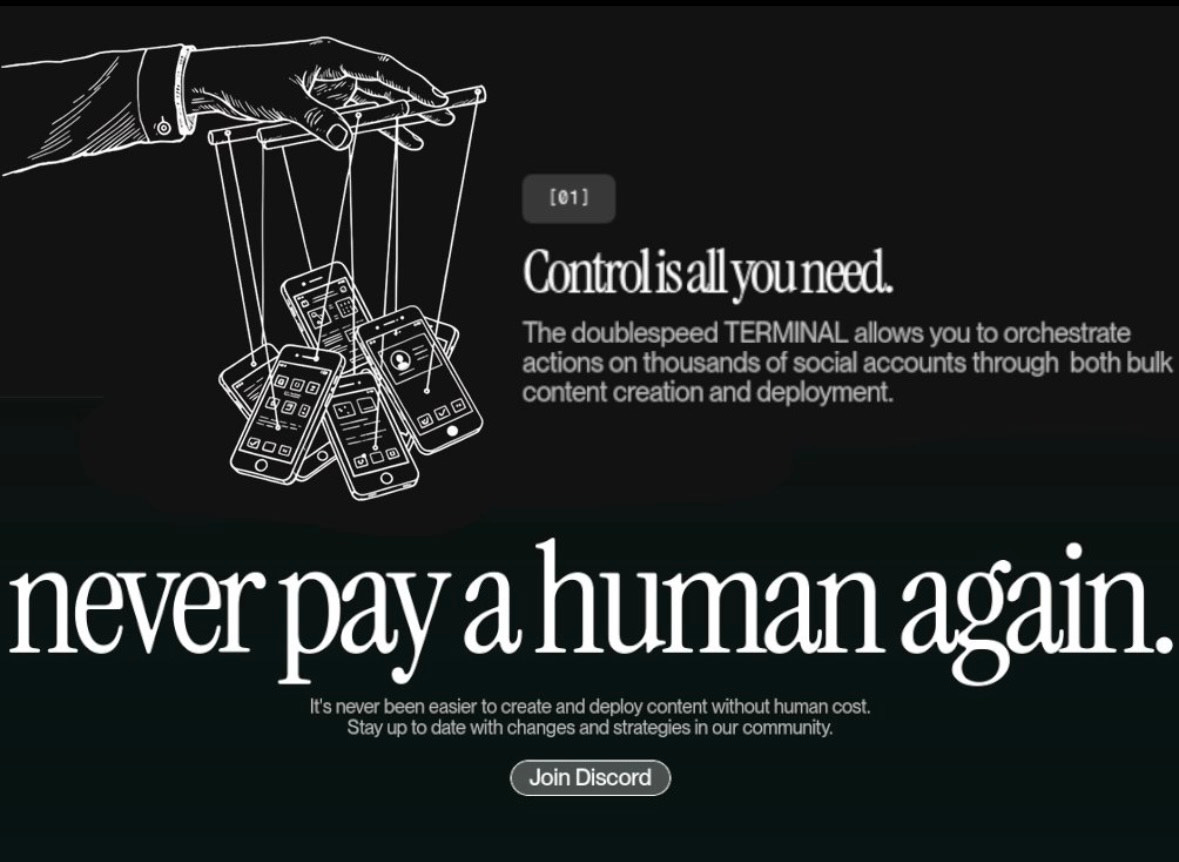

Consider the logic that produces ads promising “Never Pay a Human Again” as if human labor were merely friction to be eliminated, as if the point of technology were to remove us from our own lives. It sounds like liberation. It’s not. It’s the final stage of a process that began long before AI: the reduction of all human activity to cost, our humanity to overhead.

And before we dismiss this as an outlier, some fringe startup’s tasteless marketing, it’s worth noting that the company was funded with millions from Andreessen Horowitz. This isn’t a glitch in the system. It is the system, functioning exactly as designed.

For two months, I sat inside the industry with founders, investors, “visionaries,” and “leaders,” trying to understand it from within.

I found no evil there. Only emptiness.

Rooms full of brilliant people who no longer knew what the work was for. It felt like walking into a hospital where no one remembers what medicine is, where everyone is optimizing bed turnover and insurance codes, but the question of healing has quietly disappeared from the conversation.

One founder told me his origin story: he’d started with something beautiful … a voice assistant for people who had lost their voice. Real need. Clear business. Six months later, he was selling a tool that generates AI-written content for LinkedIn.

He said it was more profitable. More scalable.

He didn’t look proud. Just tired. This is the pattern. Not villains in towers plotting harm, but people slowly forgetting why they started. The current is strong, and most of us (myself included) have felt it pulling. For a while, I drifted too. And maybe that’s why this moment feels so personal, so painful.

At the top of the economic pyramid, where deals move, capital concentrates, and innovation takes shape, sit the investors. They once navigated uncertainty through relationships: a trusted introduction, a quiet reputation, a pattern of credibility built over time. These weren’t just social ties; they were, I would say, a kind of epistemological infrastructure: a distributed system for sensing meaning amid the noise.

But those same networks have begun to calcify. What was once fluid and trust-based has turned into a wall: a defense mechanism against the flood of noise and spam that shows no sign of slowing down.

One investor told me he used to meet every founder who reached out through a mutual connection. “Now,” he said, “I can’t even tell who’s real.”

The scale is unprecedented. Analysts estimate over 60,000 AI startups launched last year, with thousands more each month. Most build variations of the same thing: copilots, chatbots, workflow optimizers. Perhaps one in a hundred raises meaningful capital. The rest compete in an economy of “slop”: identical decks, recycled interfaces, synthetic traction metrics that look like growth but measure nothing real.

The flip side of “vibe coding” is product slop: bad software wrapped in good aesthetics, fake users inflating vanity metrics, companies chasing valuation rather than value. And here’s the bitter irony: automation was supposed to bring clarity. Instead, it destroyed the very thing these investors once relied on: the subtle, embodied sense of signal that guided intuition before it could be named.

The same incentives have trickled down into the startup ecosystem. Many startups have quietly pivoted toward slop because it’s the path of least resistance to monetization. Flood the web with AI-generated content. Chase distribution through SEO arbitrage and engagement loops engineered to trigger sharing reflexes.

The logic is elegantly simple: volume equals visibility equals “winning.”

Meanwhile, solving hard problems has never been harder.

Every industry now hosts dozens of AI startups competing for the same narrow spotlight. Because most are built on the same foundation models, imitation is effortless. What once required years of research can be replicated in a weekend. So founders follow the gradient: build what scales fast, costs little to produce, demos well in a pitch meeting. Optimize for whatever captures attention, because attention is what raises the next round.

The result is an economy of slop … with products that look different but think identically. Instead of deep innovation, we get mass production at software speed: a deluge of cheap, interchangeable tools that solve problems no one actually has.

And it’s not malice driving this. It’s the system’s internal logic. When speed and reach are rewarded over substance and craft, when “traction” matters more than transformation, slop becomes not just inevitable but rational.

I watched it happen.

One startup began with genuine promise: automating repetitive content production so marketers could focus on strategy and storytelling … the work that actually requires human judgment. Within weeks, it had become a slop factory. Thousands of ads generated daily, each slightly different, none remotely alive.

At first, humans gave input. Then, gradually, automation automated itself. The marketers were laid off. “Optimization,” they called it. Eventually, no one was in the room anymore. Bots wrote the content. Bots replied to the content. Bots praised the content.

It was a closed loop, a system talking to itself.

And how is that system talking to itself now?

It’s a bit insane what’s happening. When Sam Altman posted on X that the internet might be “dead,” I didn’t quite grasp it at first. It felt abstract, provocative, maybe even performative. But then I saw it firsthand.

Not as a philosopher. As a marketer.

I was helping a startup grow its audience. The CMO dashboard looked promising: views climbing, clicks accumulating, conversions trending upward. But when we examined it closely, the numbers weren’t measuring reality; they were measuring “the appearance” of reality.

The views weren’t human. The comments came from bots. The engagement was orchestrated by systems designed to engage themselves, to generate the semblance of an audience where none existed.

Google now offers AI-assisted ad creation: generate an entire campaign in seconds, complete with AI-made videos, stock faces arranged into families that never existed, scripts written by models trained on a decade of manipulative copy. One click floods the internet with polished fabrications that mimic authenticity so well that most people scroll past without noticing the uncanny emptiness.

A Google Ads account manager admitted to me on a call: “It’s pure garbage. Don’t use it.”

Yet the system works … I guess … in the narrow sense that it produces metrics, generates reports, creates the appearance of activity. The more efficiently it functions, the less anyone is actually there.

Even creators (once the beating heart of the internet, the ones who built genuine communities through vulnerable, idiosyncratic presence) aren’t immune. I’ve watched major influencers try earnestly to reconnect: heartfelt captions, cinematic production value, intimate confessional tones.

The posts gather thousands of likes. Almost no real conversation.

Look closer at the comments: broken grammar, repeated emojis, templated phrases that sound like enthusiasm without being it. Entire threads constructed by bots pretending to be fans. Authenticity simulated. Connection automated.

The result is eerie … creators performing sincerity to an audience that has quietly evaporated, replaced by systems trained to simulate the responses that once meant someone was listening.

It’s “influence without influence”: virality without life, reach without substance. Behind the sheen of engagement lies a vacuum. A stage full of ghosts applauding on cue.

One brand executive looked up from the quarterly report and asked the most honest question I’d heard in months:

“How do we find real people?”

That question became a metric. A strategic priority. A line item in the budget.

Find the humans.

The Call for a New AI

The paradigm for how we build has to shift. Not because we’re moving slowly … that’s not it. AI is already outpacing our capacity to reflect on what we’re creating. But because speed without intentionality has become the problem.

Think of it this way: we’re like architects who’ve become so efficient at construction that we’ve forgotten to ask whether we’re building homes or prisons. The blueprints look identical from above. The difference only becomes clear once people try to live inside.

If we don’t change this paradigm, we’ll simply produce more of what we already have … faster, louder, more empty. We’ve made acceleration a virtue and reflection a liability. We’ve mistaken velocity for direction, activity for purpose.

But something is shifting.

The “hustler founder” era … the fast-talking, VC-charming, meme-posting operator phase … is reaching its natural limit. It’s unsustainable, not because it’s wrong but because it’s exhausting. Because the people living it are burning out. Because the returns are diminishing while the costs compound.

We’re entering what will look, in retrospect, like a post-AI-shock vibe shift:

Late 2022 brought the ChatGPT explosion. Everyone scrambling to build wrappers, copilots, agents, automations. The air thick with promise.

2024–2025 brought the reckoning. Most of it proved samey, brittle, shallow. AI didn’t fix work; it accelerated the slop already there. And now, slowly, a different realization: we need to build this technology with intention and integrity, or we shouldn’t build it at all.

We’re now entering a period characterized by:

Cognitive fatigue from relentless noise and automation. The exhaustion of adapting to systems that never stabilize long enough to become genuinely useful.

Emotional dissonance: the widening gap between what technology promises and what it delivers, between the future we were sold and the one we’re actually inhabiting.

In the aftermath, we don’t just need faster tools. We need new ways of thinking through the maze we’ve built. It’s time for a different kind of AI (let’s call it “AI 2.0”), one that helps us make sense of what we’ve lost, because somewhere along the way, we lost our direction.

In labs across the world (Berkeley, Princeton, Stanford), researchers are quietly doing something else. In chemistry, scientists use AI agents to propose novel compounds that robots then synthesize and test. In biology, collaborations with AI are helping design experiments that accelerate genuine discovery, not just pattern matching on existing data.

These aren’t hype cycles. They point toward a different kind of AI entirely … one focused on memory, context, and relationships. On augmenting our human judgment rather than replacing it. On helping us ask better questions instead of providing faster answers.

The next wave of meaningful companies, and meaningful creative work, won’t look like blitzscaling unicorns optimizing for exit velocity.

It will look like:

Relational networks, not just conversion funnels

Deep storytelling and brand, not just growth hacking: real connection with users and with purpose

Clear signal, not optimized prompts

Talent magnetism, not performance theater

People like you … who’ve burned out, seen through the illusion, metabolized it, and returned wiser … are quietly forming new guilds. To build outside the system’s logic entirely.

To build differently. To choose differently.

Not because it’s easy, or trending, or backed by a term sheet. But because this moment is asking something deeper from us than optimization. It’s asking for discernment. For care. For the courage to move slowly in a world addicted to scripts and prompts.

There are early signs of this quiet shift. You can feel it in certain conversations, certain rooms. A different quality of attention. A willingness to ask questions that don’t have immediate answers.

So we arrive at a choice. Not a technological one, but a human one.

Because AI won’t tell us who we are. It won’t answer the questions we’ve stopped asking ourselves. It will only amplify the direction we’ve already chosen, make visible the logic we’ve already internalized.

The hard choices remain ours: how we will build systems that remember our humanity.

“We can only be human together.”

— Desmond Tutu

That’s not just a quote I wanted to share.

It’s a decision we all have to make.

Not: can we be human together?

But: will we choose to be?
